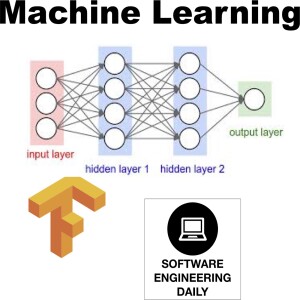

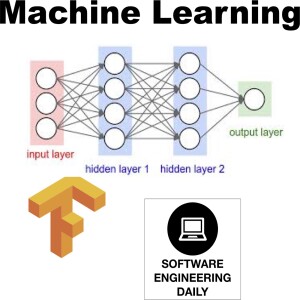

Machine Learning Archives - Software Engineering Daily

Deep Learning Hardware with Xin Wang

2018-01-29

Training a deep learning model involves operations over tensors. A tensor is a multi-dimensional array of numbers. For several years, GPUs were used for these linear algebra calculations. That’s because graphics chips are built to efficiently process matrix operations. Tensor processing consists of linear algebra operations that are similar in some ways to graphics processing, but not identical. Deep learning workloads do not run as efficiently on these conventional GPUs as

Continue reading...

